Probabilies & Statistics

- TODO:

@ - 《统计学习方法》

- k-d tree - Wikipedia, the free encyclopedia

- cart: classification and regression tree;, gini index

- MISC Notes

@ 一个分布有 mean,一个随机变量有 ev。如果这个随机变量 rv 分布为 x,则 rv 的 ev 就是 x 的 mean。

说 chi-squared 和 chi-square,都可以, 维基上是 chi-squared,用这个比较好。

全概率:原因 -> 结果

贝叶斯:结果 -> 原因

P(A|B) > P(A), P(A|B) = P(A), P(A|B) < P(A)

相关(线性相关)

binomial,

[baɪ'nomɪəl]bernoulli,

[bə:'nu:li]poisson,

/ˈpwɑːsɒn/deviation,

['divɪ'eʃən]cumulative,

['kjumjəletɪv]均方误差(mean square error)

homoscedasticity, homo-sce-das-ti-city, 方差齐性,

['hɔməusi,dæs'tisəti]fiducial,

[fɪ'djuːʃ(ə)l]bayesian,

['beʒən]conjugate,

['kɑndʒəɡet], 共轭的成对的;结合的;【化, 数, 物】共轭的;【语】同源 [根] 的 v.(根据数、人称、时态等)列举(动词)的变化形式

- 一些蛋疼的名词

@ An independent variable is also known as a “predictor variable”, “regressor”, “controlled variable”, “manipulated variable”, “explanatory variable”, “exposure variable” (see reliability theory), “risk factor” (see medical statistics), “feature” (in machine learning and pattern recognition) or an “input variable.”

A dependent variable is also known as a “response variable”, “regressand”, “predicted variable”, “measured variable”, “explained variable”, “experimental variable”, “responding variable”, “outcome variable”, and “output variable”.

“Explanatory variable” is preferred by some authors over “independent variable” when the quantities treated as “independent variables” may not be statistically independent. If the independent variable is referred to as an “explanatory variable” then the term “response variable” is preferred by some authors for the dependent variable.

“Explained variable” is preferred by some authors over “dependent variable” when the quantities treated as “dependent variables” may not be statistically dependent. If the dependent variable is referred to as an “explained variable” then the term “predictor variable” is preferred by some authors for the independent variable.

Variables may also be referred to by their form:

- continuous,

- binary/dichotomous,

- nominal categorical, and

- ordinal categorical, among others.

A variable may be thought to alter the dependent or independent variables, but may not actually be the focus of the experiment. So that variable will be kept constant or monitored to try to minimise its effect on the experiment. Such variables may be designated (指定) as either a “controlled variable”, “control variable”, or “extraneous variable”.

Extraneous variables, if included in a regression as independent variables, may aid a researcher with accurate response parameter estimation, prediction, and goodness of fit, but are not of substantive interest to the hypothesis under examination. For example, in a study examining the effect of post-secondary education on lifetime earnings, some extraneous variables might be gender, ethnicity, social class, genetics, intelligence, age, and so forth. A variable is extraneous only when it can be assumed (or shown) to influence the dependent variable. If included in a regression, it can improve the fit of the model. If it is excluded from the regression and if it has a non-zero covariance with one or more of the independent variables of interest, its omission will bias the regression’s result for the effect of that independent variable of interest. This effect is called confounding or omitted variable bias; in these situations, design changes and/or statistical control is necessary.

Extraneous variables are often classified into three types:

- Subject variables, which are the characteristics of the individuals being studied that might affect their actions. These variables include age, gender, health status, mood, background, etc.

- Blocking variables or experimental variables are characteristics of the persons conducting the experiment which might influence how a person behaves. Gender, the presence of racial discrimination, language, or other factors may qualify as such variables.

- Situational variables are features of the environment in which the study or research was conducted, which have a bearing on the outcome of the experiment in a negative way. Included are the air temperature, level of activity, lighting, and the time of day.

In quasi-experiments, differentiating between dependent and other variables may be downplayed in favour of differentiating between those variables that can be altered by the researcher and those that cannot.[citation needed] Variables in quasi-experiments may be referred to as “extraneous variables”, “subject variables”, “blocking variables”, “situational variables”, “pseudo-independent variables”, “ex post facto variables”, “natural group variables” or “non-manipulated variables”.

In modelling, variability that is not covered by the independent variable is designated by e_i and is known as the “residual”, “side effect”, “error”, “unexplained share”, “residual variable”, or “tolerance”.

refs and see also

- 一些蛋疼的名词

值得打印出来的“小抄”:Probability Cheatsheet

- Probability theory

@ Probability theory is the branch of mathematics concerned with probability, the analysis of random phenomena. The central objects of probability theory are random variables, stochastic processes, and events: mathematical abstractions of non-deterministic events or measured quantities that may either be single occurrences or evolve over time in an apparently random fashion.

Terminology (words)

@- RV: Random Varible

- CRV: Continuous Random Varaible

- DRV: Discrete Random Varaible

- CDF, joint CDF

- PMF, joint PMF

- PDF, joint PDF

- EV: expected value

- LOTUS: Law of the Unconscious Statistician

- Indicator Random Variables

- UoU: Universality of Uniform

- MGF: Moment Generating Functions

- CLT: Central Limit Theorem

- LLN: Law of Large Numbers

- RSS: Residual Sum of Squares

- OLS, ordinary least square

- LAD, Least absolute deviations, also known as least absolute errors (LAE),

- stochastic

[stə'kæstɪk]adj.【数】随机的;机会的;有可能性的;随便的, random error -> stochastic error.

- Mode, mean, median

@

Geometric visualisation of the mode, median and mean of an arbitrary probability density function.

才注意到这里 mean 表示成了一个天平一样的东西……

- Moment (mathematics)

@ In mathematics, a moment is a specific quantitative measure, used in both mechanics and statistics, of the shape of a set of points. If the points represent mass, then the zeroth moment is the total mass, the first moment divided by the total mass is the center of mass, and the second moment is the rotational inertia. If the points represent probability density, then the zeroth moment is the total probability (i.e. one), the first moment is the mean, the second central moment is the variance, the third moment is the skewness, and the fourth moment (with normalization and shift) is the kurtosis. The mathematical concept is closely related to the concept of moment in physics.

对于 bounded distribution,全部的矩决定了分布。

For a bounded distribution of mass or probability, the collection of all the moments (of all orders, from 0 to ∞) uniquely determines the distribution.

矩的定义(CRV)。 The n-th moment of a real-valued continuous function f(x) of a real variable about a value c is

\[\mu_n=\int_{-\infty}^\infty (x - c)^n\,f(x)\,dx.\]

For the second and higher moments, the central moments (moments about the mean, with c being the mean) are usually used rather than the moments about zero, because they provide clearer information about the distribution’s shape.

Other moments may also be defined. For example, the n-th inverse moment about zero is \(\operatorname{E}\left[X^{-n}\right]\) and the n-th logarithmic moment about zero is \(\operatorname{E}\left[\ln^n(X)\right]\).

Significance of moments (raw, central, standardised) and cumulants (累计误差) (raw, standardised), in connection with named properties of distributions:

mean

The first raw moment is the mean.

variance

The second central moment is the variance. Its positive square root is the standard deviation σ.

skewness

The third central moment is a measure of the lopsidedness (不平衡) of the distribution; any symmetric distribution will have a third central moment, if defined, of zero. The normalised third central moment is called the skewness, often γ (gamma).1

Kurtosis

[kɜː'təʊsɪs]n.【统】峭度, 峰度;峰态;峰度系数Normalised moments

The normalised n-th central moment or standardized moment is the n-th central moment divided by σn; the normalised n-th central moment of

\[x = \frac{\operatorname{E} \left [(x - \mu)^n \right ]}{\sigma^n}.\]

- Standard error

@ The standard error (SE) is the standard deviation of the sampling distribution of a statistic, most commonly of the mean. The term may also be used to refer to an estimate of that standard deviation, derived from a particular sample used to compute the estimate.

For a value that is sampled with an unbiased normally distributed error, the above depicts the proportion of samples that would fall between 0, 1, 2, and 3 standard deviations above and below the actual value.

For example, the sample mean is the usual estimator of a population mean. However, different samples drawn from that same population would in general have different values of the sample mean, so there is a distribution of sampled means (with its own mean and variance). The standard error of the mean (SEM) (i.e., of using the sample mean as a method of estimating the population mean) is the standard deviation of those sample means over all possible samples (of a given size) drawn from the population. Secondly, the standard error of the mean can refer to an estimate of that standard deviation, computed from the sample of data being analyzed at the time.

The standard error of the mean (SE or SEM) is the standard deviation of the sample-mean’s estimate of a population mean.

\[\text{SE}_\bar{x}\ = \frac{s}{\sqrt{n}}\]

where

- s is the sample standard deviation (i.e., the sample-based estimate of the standard deviation of the population), and

- n is the size (number of observations) of the sample

This estimate may be compared with the formula for the true standard deviation of the sample mean:

\[\text{SD}_\bar{x}\ = \frac{\sigma}{\sqrt{n}}\]

- Independent and identically distributed random variables

@ The abbreviation i.i.d. is particularly common in statistics (often as iid, sometimes written IID), where observations in a sample are often assumed to be effectively i.i.d. for the purposes of statistical inference. The assumption (or requirement) that observations be i.i.d. tends to simplify the underlying mathematics of many statistical methods (see mathematical statistics and statistical theory). However, in practical applications of statistical modeling the assumption may or may not be realistic. To test how realistic the assumption is on a given data set, the autocorrelation (自相关) can be computed, lag plots drawn or turning point test performed. The generalization of exchangeable random variables is often sufficient and more easily met.

White noise is a simple example of IID.

- Cumulative distribution function (CDF)

@

Every cumulative distribution function F is non-decreasing and right-continuous, which makes it a càdlàg function2. Furthermore,

\[\lim_{x\to -\infty}F(x)=0, \quad \lim_{x\to +\infty}F(x)=1.\]

- Probability density function (pdf)

@

If \(F_X\) is the CDF of \(X\), then:

\[F_X(x) = \int_{-\infty}^x f_X(u) \, du ,\]

and (if \(f_X\) is continuous at x)

\[f_X(x) = \frac{d}{dx} F_X(x).\]

- Probability mass function (pmf)

@

\[f_X(x) = \Pr(X = x) = \Pr(\{s \in S: X(s) = x\}).\]

Suppose that S is the sample space of all outcomes of a single toss of a fair coin, and X is the random variable defined on S assigning 0 to “tails” and 1 to “heads”. Since the coin is fair, the probability mass function is

\[f_X(x) = \begin{cases}\frac{1}{2}, &x \in \{0, 1\},\\0, &x \notin \{0, 1\}.\end{cases}\]

- Probability space

@ In probability theory, a probability space or a probability triple is a mathematical construct that models a real-world process (or “experiment”) consisting of states that occur randomly. A probability space is constructed with a specific kind of situation or experiment in mind. One proposes that each time a situation of that kind arises, the set of possible outcomes is the same and the probabilities are also the same.

A probability space consists of three parts:

- A sample space, \(\Omega\), which is the set of all possible outcomes.

- A set of events \(\mathcal{F}\), where each event is a set containing zero or more outcomes.

- The assignment of probabilities to the events; that is, a function P from events to probabilities.

A probability space is a mathematical triplet (\(\Omega\), \(\mathcal{F}\), P) that presents a model for a particular class of real-world situations. As with other models, its author ultimately defines which elements \(\Omega\), \(\mathcal{F}\), and P will contain.

- Expected value

@ Univariate discrete random variable, countable case

\[\operatorname{E}[X] = \sum_{i=1}^\infty x_i\, p_i,\]

Univariate continuous random variable

\[\operatorname{E}[X] = \int_{-\infty}^\infty x f(x)\, \mathrm{d}x.\]

General definition

In general, if X is a random variable defined on a probability space (Ω, Σ, P), then the expected value of X, denoted by \(E[X]\) (or \(〈X〉\), \(X\)), is defined as the Lebesgue integral

\[\operatorname{E} [X] = \int_\Omega X \, \mathrm{d}P = \int_\Omega X(\omega) P(\mathrm{d}\omega)\]

When this integral exists, it is defined as the expectation of X. Not all random variables have a finite expected value, since the integral may not converge absolutely; furthermore, for some it is not defined at all (e.g., Cauchy distribution). Two variables with the same probability distribution will have the same expected value, if it is defined.

Expectation of matrices

If X is an m × n matrix, then the expected value of the matrix is defined as the matrix of expected values:

\[ \begin{align} \operatorname{E}[X] &= \operatorname{E} \left [ \begin{pmatrix} x_{1,1} & x_{1,2} & \cdots & x_{1,n} \\ x_{2,1} & x_{2,2} & \cdots & x_{2,n} \\ \vdots & \vdots & \ddots & \vdots \\ x_{m,1} & x_{m,2} & \cdots & x_{m,n} \end{pmatrix} \right ] \\ &= \begin{pmatrix} \operatorname{E}[x_{1,1}] & \operatorname{E}[x_{1,2}] & \cdots & \operatorname{E}[x_{1,n}] \\ \operatorname{E}[x_{2,1}] & \operatorname{E}[x_{2,2}] & \cdots & \operatorname{E}[x_{2,n}] \\ \vdots & \vdots & \ddots & \vdots \\ \operatorname{E}[x_{m,1}] & \operatorname{E}[x_{m,2}] & \cdots & \operatorname{E}[x_{m,n}] \end{pmatrix} \end{align}. \]

This is utilized in covariance matrices.

- Law of the unconscious statistician

@ In probability theory and statistics, the law of the unconscious statistician (sometimes abbreviated LOTUS) is a theorem used to calculate the expected value of a function g(X) of a random variable X when one knows the probability distribution of X but one does not explicitly know the distribution of g(X).

好处是可以不用知道 Y 的分布,只要知道 X 的分布,以及 Y 关于 X 的函数,即可算出 Y 的期望。

TODO: Law of the unconscious statistician - Wikipedia, the free encyclopedia

- Variance

@ The variance of a random variable X is the expected value of the squared deviation from the mean μ = E[X]:

\[\operatorname{Var}(X) = \operatorname{E}\left[(X - \mu)^2 \right].\]

The variance can also be thought of as the covariance of a random variable with itself:

\[\operatorname{Var}(X) = \operatorname{Cov}(X, X).\]

Continuous random variable

\[\operatorname{Var}(X) =\sigma^2 =\int (x-\mu)^2 \, f(x) \, dx\, =\int x^2 \, f(x) \, dx\, - \mu^2\]

where \(\mu\) is the expected value,

\[\mu = \int x \, f(x) \, dx\,\]

Discrete random variable

If the generator of random variable X is discrete with probability mass function x1 ↦ p1, …, xn ↦ pn, then

\[\operatorname{Var}(X) = \sum_{i=1}^n p_i\cdot(x_i - \mu)^2,\]

or equivalently

\[\operatorname{Var}(X) = \sum_{i=1}^n p_i x_i ^2- \mu^2,\]

where \(\mu\) is the expected value, i.e.

\[\mu = \sum_{i=1}^n p_i\cdot x_i.\]

Sum of uncorrelated variables (Bienaymé formula)

随机变量的和的方差等于各随机变量的方差的和。

One reason for the use of the variance in preference to other measures of dispersion is that the variance of the sum (or the difference) of uncorrelated random variables is the sum of their variances:

\[\operatorname{Var}\Big(\sum_{i=1}^n X_i\Big) = \sum_{i=1}^n \operatorname{Var}(X_i).\]

This statement is called the Bienaymé formula (bienayme formula) and was discovered in 1853. It is often made with the stronger condition that the variables are independent, but being uncorrelated suffices. So if all the variables have the same variance σ2, then, since division by n is a linear transformation, this formula immediately implies that the variance of their mean is

\[\operatorname{Var}\left(\overline{X}\right) = \operatorname{Var}\left(\frac {1} {n}\sum_{i=1}^n X_i\right) = \frac {1} {n^2}\sum_{i=1}^n \operatorname{Var}\left(X_i\right) = \frac {\sigma^2} {n}.\]

That is, ** the variance of the mean decreases when n increases**. This formula for the variance of the mean is used in the definition of the standard error of the sample mean, which is used in the central limit theorem.

这样就可以用更多的数据来减少误差。

- Covariance

@ In probability theory and statistics, covariance is a measure of how much two random variables change together. If the greater values of one variable mainly correspond with the greater values of the other variable, and the same holds for the lesser values, i.e., the variables tend to show similar behavior, the covariance is positive.

A distinction must be made between

- the covariance of two random variables, which is a population parameter that can be seen as a property of the joint probability distribution, and

- the sample covariance, which serves as an estimated value of the parameter.

The covariance between two jointly distributed real-valued random variables X and Y with finite second moments is defined as

和方差的定义类似。

\[\operatorname{cov}(X,Y) = \operatorname{E}{\big[(X - \operatorname{E}[X])(Y - \operatorname{E}[Y])\big]},\]

where E[X] is the expected value of X, also known as the mean of X. By using the linearity property of expectations, this can be simplified to

\[ \begin{align} \operatorname{cov}(X,Y) &= \operatorname{E}\left[\left(X - \operatorname{E}\left[X\right]\right) \left(Y - \operatorname{E}\left[Y\right]\right)\right] \\ &= \operatorname{E}\left[X Y - X \operatorname{E}\left[Y\right] - \operatorname{E}\left[X\right] Y + \operatorname{E}\left[X\right] \operatorname{E}\left[Y\right]\right] \\ &= \operatorname{E}\left[X Y\right] - \operatorname{E}\left[X\right] \operatorname{E}\left[Y\right] - \operatorname{E}\left[X\right] \operatorname{E}\left[Y\right] + \operatorname{E}\left[X\right] \operatorname{E}\left[Y\right] \\ &= \operatorname{E}\left[X Y\right] - \operatorname{E}\left[X\right] \operatorname{E}\left[Y\right]. \end{align} \]

However, when \(\operatorname{E}[XY] \approx \operatorname{E}[X]\operatorname{E}[Y]\), this last equation is prone to catastrophic cancellation when computed with floating point arithmetic and thus should be avoided in computer programs when the data has not been centered before. Numerically stable algorithms should be preferred in this case.

For random vectors \(\mathbf{X} \in \mathbb{R}^m\) and \(\mathbf{Y} \in \mathbb{R}^n\), the m×n cross covariance matrix (also known as dispersion (

[dɪ'spɝʒn], 离差) matrix or variance–covariance matrix, or simply called covariance matrix) is equal to\[ \begin{align} \operatorname{cov}(\mathbf{X},\mathbf{Y}) &= \operatorname{E} \left[(\mathbf{X} - \operatorname{E}[\mathbf{X}]) (\mathbf{Y} - \operatorname{E}[\mathbf{Y}])^\mathrm{T}\right]\\ &= \operatorname{E}\left[\mathbf{X} \mathbf{Y}^\mathrm{T}\right] - \operatorname{E}[\mathbf{X}]\operatorname{E}[\mathbf{Y}]^\mathrm{T}, \end{align} \]

where \(m^T\) is the transpose of the vector (or matrix) m.

For a vector \(\mathbf{X}= \begin{bmatrix}X_1 & X_2 & \dots & X_m\end{bmatrix}^\mathrm{T}\) of m jointly distributed random variables with finite second moments, its covariance matrix is defined as

\[\Sigma(\mathbf{X}) = \sigma(\mathbf{X},\mathbf{X}).\]

- Classical definition

@ The probability of an event is the ratio of

- the number of cases favorable to it, to

- the number of all cases possible

when nothing leads us to expect that any one of these cases should occur more than any other, which renders them, for us, equally possible.

This definition is essentially a consequence of the principle of indifference. If elementary events are assigned equal probabilities, then the probability of a disjunction of elementary events is just the number of events in the disjunction divided by the total number of elementary events.

The classical definition of probability was called into question by several writers of the nineteenth century, including John Venn and George Boole. The frequentist definition of probability became widely accepted as a result of their criticism, and especially through the works of R.A. Fisher. The classical definition enjoyed a revival of sorts due to the general interest in Bayesian probability, because Bayesian methods require a prior probability distribution and the principle of indifference offers one source of such a distribution. Classical probability can offer prior probabilities that reflect ignorance which often seems appropriate before an experiment is conducted.

- Modern definition

@ (Discrete) The modern definition starts with a finite or countable set called the sample space, which relates to the set of all possible outcomes in classical sense, denoted by \(\Omega\). It is then assumed that for each element \(x \in \Omega\,\), an intrinsic “probability” value \(f(x)\,\) is attached, which satisfies the following properties:

- \(f(x)\in[0,1]\mbox{ for all }x\in \Omega\,\);

- \(\sum_{x\in \Omega} f(x) = 1\,\).

(Continuous) If the outcome space of a random variable X is the set of real numbers (\(\mathbb{R}\)) or a subset thereof, then a function called the cumulative distribution function (or cdf) \(F\,\) exists, defined by \(F(x) = P(X\le x) \,\). That is, \(F(x)\) returns the probability that X will be less than or equal to x.

The cdf necessarily satisfies the following properties.

- \(F\,\) is a monotonically non-decreasing, right-continuous function;

- \(\lim_{x\rightarrow -\infty} F(x)=0\,\);

- \(\lim_{x\rightarrow \infty} F(x)=1\,\).

- Classical probability distributions

@ 常见的经典的概率分布。

Certain random variables occur very often in probability theory because they well describe many natural or physical processes. Their distributions therefore have gained special importance in probability theory. Some fundamental discrete distributions are the discrete uniform, Bernoulli, binomial, negative binomial, Poisson and geometric distributions. Important continuous distributions include the continuous uniform, normal, exponential, gamma and beta distributions.

- Discrete Uniform distribution

@ The discrete uniform distribution itself is inherently non-parametric. It is convenient, however, to represent its values generally by an integer interval [a,b], so that a,b become the main parameters of the distribution (often one simply considers the interval [1,n] with the single parameter n). With these conventions, the cumulative distribution function (CDF) of the discrete uniform distribution can be expressed, for any k ∈ [a,b], as

\[F(k;a,b)=\frac{\lfloor k \rfloor -a + 1}{b-a+1}\]

pmf & CDF

pmf \(\frac{1}{n}\) CDF \(\frac{\lfloor k \rfloor -a+1}{n}\) Mean \(\frac{a+b}{2}\,\) Variance \(\frac{(b-a+1)^2-1}{12}\) - Bernoulli distribution 伯努利分布

@ If X is a random variable with this distribution, we have:

\[\Pr(X=1) = 1 - \Pr(X=0) = 1 - q = p.\!\]

The probability mass function f of this distribution, over possible outcomes k, is

\[f(k;p) = \begin{cases} p & \text{if }k=1, \\[6pt] 1-p & \text {if }k=0.\end{cases}\]

This can also be expressed as

\[f(k;p) = p^k (1-p)^{1-k}\!\quad \text{for }k\in\{0,1\}.\]

The Bernoulli distribution is a special case of the binomial distribution with n = 1.

Parameters 0<p<1, \(p\in\mathbb{R}\) Support \(k \in \{0,1\}\,\) pmf \(\begin{cases} q=(1-p) & \text{for }k=0 \\ p & \text{for }k=1 \end{cases}\) CDF \(\begin{cases} 0 & \text{for }k<0 \\ 1 - p & \text{for }0\leq k<1 \\ 1 & \text{for }k\geq 1 \end{cases}\) Mean \(p\,\) Median \(\begin{cases} 0 & \text{if } q > p\\ 0.5 & \text{if } q=p\\ 1 & \text{if } q<p \end{cases}\) Mode \(\begin{cases} 0 & \text{if } q > p\\ 0, 1 & \text{if } q=p\\ 1 & \text{if } q < p \end{cases}\) Variance \(p(1-p) (=pq)\,\) Skewness \(\frac{1-2p}{\sqrt{pq}}\) Ex. kurtosis \(\frac{1-6pq}{pq}\) Entropy \(-q\ln(q)-p\ln(p)\,\) MGF \(q+pe^t\,\) CF \(q+pe^{it}\,\) PGF \(q+pz\,\) Fisher information \(\frac{1}{p(1-p)}\) - Binomial distribution 二项分布

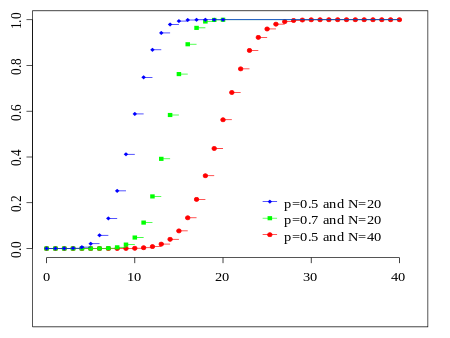

@ In probability theory and statistics, the binomial distribution with parameters n and p is the discrete probability distribution of the number of successes in a sequence of n independent yes/no experiments, each of which yields success with probability p. A success/failure experiment is also called a Bernoulli experiment or Bernoulli trial; when n = 1, the binomial distribution is a Bernoulli distribution. The binomial distribution is the basis for the popular binomial test of statistical significance.

pdf & CDF

Parameters n ∈ N0 — number of trials, p ∈ [0,1] — success probability in each trial Support \(k ∈ { 0, …, n }\) — number of successes pmf \(\textstyle {n \choose k}\, p^k (1-p)^{n-k}\) CDF \(\textstyle I_{1-p}(n - k, 1 + k)\) Mean \(np\) Median \(\lfloor np \rfloor or \lceil np \rceil\) Mode \(\lfloor (n + 1)p \rfloor or \lceil (n + 1)p \rceil - 1\) Variance \(np(1 - p)\) Skewness \(\frac{1-2p}{\sqrt{np(1-p)}}\) Ex. kurtosis \(\frac{1-6p(1-p)}{np(1-p)}\) Entropy \(\frac12 \log_2 \big( 2\pi e\, np(1-p) \big) + O \left( \frac{1}{n} \right)\) in shannons. For nats, use the natural log in the log. MGF \((1-p + pe^t)^n \!\) CF \((1-p + pe^{it})^n \!\) PGF \(G(z) = \left[(1-p) + pz\right]^n.\) Fisher information \(g_n(p) = \frac{n}{p(1-p)}\) (for fixed n) - Poisson distribution 泊松分布

@ - Story

@ There are rare events (low probability events) that occur many different ways (high possibilities of occurences) at an average rate of λ occurrences per unit space or time. The number of events that occur in that unit of space or time is X.

Example A certain busy intersection (十字路口)has an average of 2 accidents per month. Since an accident is a low probability event that can happen many different ways, it is reasonable to model the number of accidents in a month at that intersection as Pois(2). Then the number of accidents that happen in two months at that intersection is distributed Pois(4).

速率是 λ, 在时间 n 内发生的次数用 X 来表示,那么 X ~ Poi(λ)

Poission 和泰勒级数是相关的。

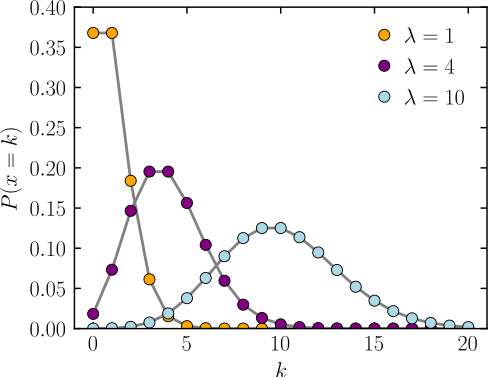

Poisson distribution (

/ˈpwɑːsɒn/), named after French mathematician Siméon Denis Poisson, is a discrete probability distribution that expresses the probability of a given number of events occurring in a fixed interval of time and/or space if these events occur with a known average rate and independently of the time since the last event. The Poisson distribution can also be used for the number of events in other specified intervals such as distance, area or volume.A discrete random variable X is said to have a Poisson distribution with parameter λ > 0, if, for k = 0, 1, 2, …, the probability mass function of X is given by:

\[\!f(k; \lambda)= \Pr(X = k)= \frac{\lambda^k e^{-\lambda}}{k!},\]

The positive real number λ is equal to the expected value of X and also to its variance

\[\lambda=\operatorname{E}(X)=\operatorname{Var}(X).\]

pmf: The horizontal axis is the index k, the number of occurrences. λ is the expected value. The function is defined only at integer values of k. The connecting lines are only guides for the eye.

CDF: The horizontal axis is the index k, the number of occurrences. The CDF is discontinuous at the integers of k and flat everywhere else because a variable that is Poisson distributed takes on only integer values.

Parameters \(λ > 0 (real)\) Support \(k ∈ ℤ*\) pmf \(\frac{\lambda^k e^{-\lambda}}{k!}\) CDF \(\frac{\Gamma(\lfloor k+1\rfloor, \lambda)}{\lfloor k\rfloor !}\), or \(e^{-\lambda} \sum_{i=0}^{\lfloor k\rfloor} \frac{\lambda^i}{i!}\), or \(Q(\lfloor k+1\rfloor,\lambda)\) (for \(k\ge 0\), where \(\Gamma(x, y)\) is the incomplete gamma function, \(\lfloor k\rfloor\) is the floor function, and Q is the regularized gamma function) Mean \(\lambda\) Median \(\approx\lfloor\lambda+1/3-0.02/\lambda\rfloor\) Mode \(\lceil\lambda\rceil - 1, \lfloor\lambda\rfloor\) Variance \(\lambda\) Skewness \(\lambda^{-1/2}\) Ex. kurtosis \(\lambda^{-1}\) Entropy \(\lambda[1 - \log(\lambda)] + e^{-\lambda}\sum_{k=0}^\infty \frac{\lambda^k\log(k!)}{k!} (for large \lambda)\) \(\frac{1}{2}\log(2 \pi e \lambda) - \frac{1}{12 \lambda} - \frac{1}{24 \lambda^2} - \qquad \frac{19}{360 \lambda^3} + O\left(\frac{1}{\lambda^4}\right)\) MGF \(\exp(\lambda (e^{t} - 1))\) CF \(\exp(\lambda (e^{it} - 1))\) PGF \(\exp(\lambda(z - 1))\) Fisher information \(\lambda^{-1}\) - Story

- Compound Poisson distribution

@ In probability theory, a compound Poisson distribution is the probability distribution of the sum of a number of independent identically-distributed random variables, where the number of terms to be added is itself a Poisson-distributed variable. In the simplest cases, the result can be either a continuous or a discrete distribution.

- Definition

@ Suppose that

\[N\sim\operatorname{Poisson}(\lambda),\]

i.e., N is a random variable whose distribution is a Poisson distribution with expected value λ, and that

\[X_1, X_2, X_3, \dots\]

are identically distributed random variables that are mutually independent and also independent of N. Then the probability distribution of the sum of N i.i.d. random variables conditioned on the number of these variables (N):

\[Y \mid N=\sum_{n=1}^N X_n\]

has a well-defined distribution. In the case N = 0, then the value of Y is 0, so that then Y | N = 0 has a degenerate distribution.

The compound Poisson distribution is obtained by marginalising the joint distribution of (Y,N) over N, where this joint distribution is obtained by combining the conditional distribution Y | N with the marginal distribution of N.

- Properties

Mean and variance of the compound distribution derive in a simple way from law of total expectation and the law of total variance. Thus

TODO: Compound Poisson distribution - Wikipedia, the free encyclopedia

- Definition

- Law of total expectation

@ The proposition in probability theory known as the law of total expectation, the law of iterated expectations, the tower rule, the smoothing theorem, and Adam’s Law among other names, states that if X is an integrable random variable (i.e., a random variable satisfying E( | X | ) < ∞) and Y is any random variable, not necessarily integrable, on the same probability space, then

\[\operatorname{E} (X) = \operatorname{E} ( \operatorname{E} ( X \mid Y)),\]

i.e., the expected value of the conditional expected value of X given Y is the same as the expected value of X.

The conditional expected value E( X | Y ) is a random variable in its own right, whose value depends on the value of Y. Notice that the conditional expected value of X given the event Y = y is a function of y. If we write E( X | Y = y) = g(y) then the random variable E( X | Y ) is just g(Y).

One special case states that if \(A_1, A_2, \ldots, A_n\) is a partition of the whole outcome space, i.e. these events are mutually exclusive and exhaustive, then

\[\operatorname{E} (X) = \sum_{i=1}^{n}{\operatorname{E}(X \mid A_i) \operatorname{P}(A_i)}.\]

TODO: Law of total expectation - Wikipedia, the free encyclopedia

- Law of total variance

@ In probability theory, the law of total variance or variance decomposition formula, also known as Eve’s law, states that if X and Y are random variables on the same probability space, and the variance of Y is finite, then

\[\operatorname{Var}[Y]=\operatorname{E}(\operatorname{Var}[Y\mid X])+\operatorname{Var}(\operatorname{E}[Y\mid X]).\,\]

不仅概率可以分块加,variance 也可以。

TODO: Law of total variance - Wikipedia, the free encyclopedia

- Poisson approximation

@ the Poisson distribution with parameter λ = np can be used as an approximation to B(n, p) of the binomial distribution if n is sufficiently large and p is sufficiently small.

Limiting distributions

Poisson limit theorem: As n approaches ∞ and p approaches 0, then the Binomial(n, p) distribution approaches the Poisson distribution with expected value λ

de Moivre–Laplace theorem: As n approaches ∞ while p remains fixed, the distribution of \[\frac{X-np}{\sqrt{np(1-p)}}\]

approaches the normal distribution with expected value 0 and variance 1. This result is sometimes loosely stated by saying that the distribution of X is asymptotically normal with expected value np and variance np(1 − p). This result is a specific case of the central limit theorem.

这个看成 normalize 就可以了。Xnormalized = (X - mean)/variance

- Poisson limit theorem

@ The law of rare events or Poisson limit theorem gives a Poisson approximation to the binomial distribution, under certain conditions. The theorem was named after Siméon Denis Poisson (1781–1840).

If \(n \rightarrow \infty, p \rightarrow 0, \text{such that } np \rightarrow \lambda\), then

\[\frac{n!}{(n-k)!k!} p^k (1-p)^{n-k} \rightarrow e^{-\lambda}\frac{\lambda^k}{k!}.\]

- Negative binomial distribution

@ Different texts adopt slightly different definitions for the negative binomial distribution. They can be distinguished by whether the support starts at k = 0 or at k = r, whether p denotes the probability of a success or of a failure, and whether r represents success or failure, so it is crucial to identify the specific parametrization used in any given text.

Notation \(\mathrm{NB}(r,\,p)\) Parameters r > 0 — number of failures until the experiment is stopped, p ∈ (0,1) — success probability in each experiment (real) Support k ∈ { 0, 1, 2, 3, … } — number of successes pmf \({k+r-1 \choose k}\cdot (1-p)^r p^k,\!\) involving a binomial coefficient3 CDF \(1-I_p(k+1,\,r)\), the regularized incomplete beta function Mean \(\frac{pr}{1-p}\) Mode \(\begin{cases}\big\lfloor\frac{p(r-1)}{1-p}\big\rfloor & \text{if}\ r>1 \\ 0 & \text{if}\ r\leq 1\end{cases}\) Variance \(\frac{pr}{(1-p)^2}\) Skewness \(\frac{1+p}{\sqrt{pr}}\) Suppose there is a sequence of independent Bernoulli trials. Thus, each trial has two potential outcomes called “success” and “failure”. In each trial the probability of success is p and of failure is (1 − p). We are observing this sequence until a predefined number r of failures has occurred. Then the random number of successes we have seen, X, will have the negative binomial (or Pascal) distribution:

\[X\sim\operatorname{NB}(r; p)\]

- Geometric distribution

@ - Story

@ X is the number of “failures” that we will achieve before we achieve our first success. Our successes have probability p.

Example If each pokeball we throw has probability 1/10 to catch Mew, the number of failed pokeballs will be distributed Geom( 1/10 ).

These two different geometric distributions should not be confused with each other. Often, the name shifted geometric distribution is adopted for the former one (distribution of the number X); however, to avoid ambiguity, it is considered wise to indicate which is intended, by mentioning the support explicitly.

It’s the probability that the first occurrence of success requires k number of independent trials, each with success probability p. If the probability of success on each trial is p, then the probability that the kth trial (out of k trials) is the first success is

\[\Pr(X = k) = (1-p)^{k-1}\,p\,\]

for k = 1, 2, 3, ….

The above form of geometric distribution is used for modeling the number of trials up to and including the first success. By contrast, the following form of the geometric distribution is used for modeling the number of failures until the first success:

\[\Pr(Y=k) = (1 - p)^k\,p\,\]

for k = 0, 1, 2, 3, ….

pmf & CDF

Parameters 0< p \(\leq\) 1 success probability (real) 0< p \(\leq\) 1 success probability (real) Support k trials where \(k \in \{1,2,3,\dots\}\!\) k failures where \(k \in \{0,1,2,3,\dots\}\!\) pmf \((1 - p)^{k-1}\,p\!\) \((1 - p)^{k}\,p\!\) CDF \(1-(1 - p)^k\!\) \(1-(1 - p)^{k+1}\!\) Mean \(\frac{1}{p}\!\) \(\frac{1-p}{p}\!\) Median \(\left\lceil \frac{-1}{\log_2(1-p)} \right\rceil\!\) \(\left\lceil \frac{-1}{\log_2(1-p)} \right\rceil\! - 1\) Mode 1 0 Variance \(\frac{1-p}{p^2}\!\) \(\frac{1-p}{p^2}\!\) Skewness \(\frac{2-p}{\sqrt{1-p}}\!\) \(\frac{2-p}{\sqrt{1-p}}\!\) Excess kurtosis \(6+\frac{p^2}{1-p}\!\) \(6+\frac{p^2}{1-p}\!\) Entropy \(\tfrac{-(1-p)\log_2 (1-p) - p \log_2 p}{p}\!\) \(\tfrac{-(1-p)\log_2 (1-p) - p \log_2 p}{p}\!\) mgf \(\frac{pe^t}{1-(1-p) e^t}\!, for t<-\ln(1-p)\!\) \(\frac{p}{1-(1-p)e^t}\!\) Characteristic function \(\frac{pe^{it}}{1-(1-p)\,e^{it}}\!\) \(\frac{p}{1-(1-p)\,e^{it}}\!\) The expected value of a geometrically distributed random variable X is 1/p and the variance is (1 − p)/p2:

\[\mathrm{E}(X) = \frac{1}{p}, \qquad\mathrm{var}(X) = \frac{1-p}{p^2}.\]

- Story

- Hypergeometric distribution

@ A random variable X follows the hypergeometric distribution if its probability mass function (pmf) is given by

\[ P(X = k) = \frac{\binom{K}{k} \binom{N - K}{n-k}}{\binom{N}{n}}, \]

where

- N is the population size,

- K is the number of success states in the population,

- n is the number of draws,

- k is the number of observed successes,

- \(\textstyle {a \choose b}\) is a binomial coefficient.

- Continuous Uniform distribution

@ In probability theory and statistics, the continuous uniform distribution or rectangular distribution is a family of symmetric probability distributions such that for each member of the family, all intervals of the same length on the distribution’s support are equally probable. The support is defined by the two parameters, a and b, which are its minimum and maximum values. The distribution is often abbreviated U(a,b). It is the maximum entropy probability distribution for a random variate X under no constraint other than that it is contained in the distribution’s support.

Notation \(\mathcal{U}(a, b)\) or \(\mathrm{unif}(a,b)\) Parameters \(-\infty < a < b < \infty \,\) Support \(x \in [a,b]\) PDF \(\begin{cases} \frac{1}{b - a} & \text{for } x \in [a,b] \\ 0 & \text{otherwise} \end{cases}\) CDF \(\begin{cases} 0 & \text{for } x < a \\ \frac{x-a}{b-a} & \text{for } x \in [a,b) \\ 1 & \text{for } x \ge b \end{cases}\) Mean \(\tfrac{1}{2}(a+b)\) Median \(\tfrac{1}{2}(a+b)\) Mode any value in (a,b) Variance \(\tfrac{1}{12}(b-a)^2\) Skewness 0 Ex. kurtosis \(-\tfrac{6}{5}\) Entropy \(\log(b-a) \,\) MGF \(\begin{cases} \frac{\mathrm{e}^{tb}-\mathrm{e}^{ta}}{t(b-a)} &\text{for } t \neq 0 \\ 1 &\text{for } t = 0 \end{cases}\) CF \(\frac{\mathrm{e}^{itb}-\mathrm{e}^{ita}}{it(b-a)}\) - Error function

@

Plot of the error function

(erf: a sigmoid)

In mathematics, the error function (also called the Gauss error function) is a special function (non-elementary) of sigmoid shape that occurs in probability, statistics, and partial differential equations describing diffusion. It is defined as:

\[\operatorname{erf}(x) = \frac{2}{\sqrt\pi}\int_0^x e^{-t^2}\,\mathrm dt.\]

The complementary error function, denoted erfc, is defined as

\[\begin{align} \operatorname{erfc}(x) & = 1-\operatorname{erf}(x) \\ & = \frac{2}{\sqrt\pi} \int_x^{\infty} e^{-t^2}\,\mathrm dt \\ & = e^{-x^2} \operatorname{erfcx}(x), \end{align}\]

which also defines erfcx, the scaled complementary error function (which can be used instead of erfc to avoid arithmetic underflow). Another form of \(\operatorname{erfc}(x)\) is known as Craig’s formula:

\[\begin{align} \operatorname{erfc}(x) & = \frac{2}{\pi} \int_0^{\pi/2} \exp \left( - \frac{x^2}{\sin^2 \theta} \right) d\theta. \end{align}\]

The error function is related to the cumulative distribution , the integral of the standard normal distribution, by

\[\Phi (x) = \frac{1}{2}+ \frac{1}{2} \operatorname{erf} \left(x/ \sqrt{2}\right) = \frac{1}{2} \operatorname{erfc} \left(-x/ \sqrt{2}\right).\]

- Q-function

@

A plot of the Q-function.

In statistics, the Q-function is the tail probability of the standard normal distribution (x). In other words, Q(x) is the probability that a normal (Gaussian) random variable will obtain a value larger than x standard deviations above the mean.

If the underlying random variable is y, then the proper argument to the tail probability is derived as:

\[x=\frac{y - \mu}{\sigma}\]

which expresses the number of standard deviations away from the mean.

Because of its relation to the cumulative distribution function of the normal distribution, the Q-function can also be expressed in terms of the error function, which is an important function in applied mathematics and physics.

Formally, the Q-function is defined as

\[Q(x) = \frac{1}{\sqrt{2\pi}} \int_x^\infty \exp\left(-\frac{u^2}{2}\right) \, du.\]

Thus,

\[Q(x) = 1 - Q(-x) = 1 - \Phi(x)\,\!,\]

where \(\Phi(x)\) is the cumulative distribution function of the normal Gaussian distribution.

- Normal distribution

@ (also, Z distribution)

The probability density of the normal distribution is:

\[f(x \; | \; \mu, \sigma^2) = \frac{1}{\sigma\sqrt{2\pi} } \; e^{ -\frac{(x-\mu)^2}{2\sigma^2} }\]

Where:

- \(\mu\) is mean or expectation of the distribution (and also its median and mode).

- \(\sigma\) is standard deviation

- \(\sigma^2\) is variance

pdf & CDF

Notation \(\mathcal{N}(\mu,\,\sigma^2)\) Parameters μ ∈ R — mean (location), σ2 > 0 — variance (squared scale) Support \(x ∈ R\) PDF \(\frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{(x - \mu)^2}{2 \sigma^2}}\) CDF \(\frac12\left[1 + \operatorname{erf}\left( \frac{x-\mu}{\sigma\sqrt{2}}\right)\right]\) Quantile \(\mu+\sigma\sqrt{2}\,\operatorname{erf}^{-1}(2F-1)\) Mean μ Median μ Mode μ Variance \(\sigma^2\,\) Skewness 0 Ex. kurtosis 0 Entropy \(\tfrac12 \ln(2\pi\,e\,\sigma^2)\) MGF \(\exp\{ \mu t + \frac{1}{2}\sigma^2t^2 \}\) CF \(\exp \{ i\mu t - \frac{1}{2}\sigma^2 t^2 \}\) Fisher information \(\begin{pmatrix}1/\sigma^2&0\\0&1/(2\sigma^4)\end{pmatrix}\) - Standard normal distribution

\[\phi(x) = \frac{e^{- \frac{\scriptscriptstyle 1}{\scriptscriptstyle 2} x^2}}{\sqrt{2\pi}}\,\]

- Standard deviation and tolerance intervals

@ For the normal distribution, the values less than one standard deviation away from the mean account for 68.27% of the set; while two standard deviations from the mean account for 95.45%; and three standard deviations account for 99.73%.

the probability that a normal deviate lies in the range μ − nσ and μ + nσ is given by

\[F(\mu+n\sigma) - F(\mu-n\sigma) = \Phi(n)-\Phi(-n) = \mathrm{erf}\left(\frac{n}{\sqrt{2}}\right),\]

- Cumulative distribution function

@ The cumulative distribution function (CDF) of the standard normal distribution, usually denoted with the capital Greek letter (phi), is the integral

\[\Phi(x)\; = \;\frac{1}{\sqrt{2\pi}} \int_{-\infty}^x e^{-t^2/2} \, dt\]

In statistics one often uses the related error function, or erf(x), defined as the probability of a random variable with normal distribution of mean 0 and variance 1/2 falling in the range [-x, x]; that is

\[\operatorname{erf}(x)\; =\; \frac{1}{\sqrt{\pi}} \int_{-x}^x e^{-t^2} \, dt\]

These integrals cannot be expressed in terms of elementary functions, and are often said to be special functions. However, many numerical approximations are known; see below.

正太分布的 CDF 和 erf 很像。满足如下关系。

The two functions are closely related, namely

\[\Phi(x)\; =\; \frac12\left[1 + \operatorname{erf}\left(\frac{x}{\sqrt{2}}\right)\right]\]

For a generic normal distribution f with mean μ and deviation σ, the cumulative distribution function is

\[F(x)\;=\;\Phi\left(\frac{x-\mu}{\sigma}\right)\;=\; \frac12\left[1 + \operatorname{erf}\left(\frac{x-\mu}{\sigma\sqrt{2}}\right)\right]\]

- Standard score

@ Z-分数,所以正太分布也叫 z 分布。标准分布,在考试测验评分里常用。

Standard scores are also called z-values, z-scores, normal scores, and standardized variables; the use of “Z” is because the normal distribution is also known as the “Z distribution”. They are most frequently used to compare a sample to a standard normal deviate, though they can be defined without assumptions of normality.

Compares the various grading methods in a normal distribution. Includes: Standard deviations, cumulative percentages, percentile equivalents, Z-scores, T-scores, standard nine, percent in stanine

The standard score of a raw score x is

\[z = {x- \mu \over \sigma}\]

In mathematical statistics, a random variable X is standardized by subtracting its expected value \(\operatorname{E}[X]\) and dividing the difference by its standard deviation \(\sigma(X) = \sqrt{\operatorname{Var}(X)}\):

\[Z = {X - \operatorname{E}[X] \over \sigma(X)}\]

- Stanine

标准九。

Stanine (STAndard NINE) is a method of scaling test scores on a nine-point standard scale with a mean of five and a standard deviation of two.

Calculating Stanines

Result Ranking 4% 7% 12% 17% 20% 17% 12% 7% 4% Stanine 1 2 3 4 5 6 7 8 9 Standard score below -1.75 -1.75 to -1.25 -1.25 to -.75 -.75 to -.25 -.25 to +.25 +.25 to +.75 +.75 to +1.25 +1.25 to +1.75 above +1.75 Wechsler scale score below 74 74 to 81 81 to 89 89 to 96 96 to 104 104 to 111 111 to 119 119 to 126 above 126 Today stanines are mostly used in educational assessment.

- t-statistic

@ notional,

['noʊʃən(ə)l]adj.猜测的;估计的;理论上的;想象的用于假设检验。

In statistics, the t-statistic is a ratio of the departure of an estimated parameter from its notional value and its standard error. It is used in hypothesis testing, for example in the Student’s t-test, in the augmented Dickey–Fuller test, and in bootstrapping.

Let \(\scriptstyle\hat\beta\) be an estimator of parameter β in some statistical model. Then a t-statistic for this parameter is any quantity of the form

\[t_{\hat{\beta}} = \frac{\hat\beta - \beta_0}{\mathrm{s.e.}(\hat\beta)}\]

where β0 is a non-random, known constant, and \(\scriptstyle s.e.(\hat\beta)\) is the standard error of the estimator \(\scriptstyle\hat\beta\). By default, statistical packages report t-statistic with β0 = 0 (these t-statistics are used to test the significance of corresponding regressor). However, when t-statistic is needed to test the hypothesis of the form H0: β = β0, then a non-zero β0 may be used.

If \(\scriptstyle\hat\beta\) is an ordinary least squares estimator in the classical linear regression model (that is, with normally distributed and homoskedastic error terms), and if the true value of parameter β is equal to β0, then the sampling distribution of the t-statistic is the Student’s t-distribution with (n − k) degrees of freedom, where n is the number of observations, and k is the number of regressors (including the intercept).

In the majority of models the estimator is consistent for β and distributed asymptotically normally. If the true value of parameter β is equal to β0 and the quantity \(\scriptstyle s.e.(\hat\beta)\) correctly estimates the asymptotic variance of this estimator, then the t-statistic will have asymptotically the standard normal distribution.

In some models the distribution of t-statistic is different from normal, even asymptotically. For example, when a time series with unit root is regressed in the augmented Dickey–Fuller test, the test t-statistic will asymptotically have one of the Dickey–Fuller distributions (depending on the test setting).

- Exponential distribution

@ - Story

@ You’re sitting on an open meadow right before the break of dawn, wishing that airplanes in the night sky were shooting stars, because you could really use a wish right now. You know that shooting stars come on average every 15 minutes, but a shooting star is not “due” to come just because you’ve waited so long. Your waiting time is memoryless; the additional time until the next shooting star comes does not depend on how long you’ve waited already.

Example The waiting time until the next shooting star is distributed Expo(4) hours. Here λ = 4 is the rate parameter, since shooting stars arrive at a rate of 1 per 1/4 hour on average. The expected time until the next shooting star is 1/λ = 1/4 hour.

Expo(λ),λ 是单位时间流星的个数,1/λ 是你等到下一个流星需要等待的时间(的期望)。

The exponential distribution (a.k.a. negative exponential distribution) is the probability distribution that describes the time between events in a Poisson process, i.e. a process in which events occur continuously and independently at a constant average rate. It is a particular case of the gamma distribution. It is the continuous analogue of the geometric distribution, and it has the key property of being memoryless. In addition to being used for the analysis of Poisson processes, it is found in various other contexts.

The exponential distribution is not the same as the class of exponential families of distributions, which is a large class of probability distributions that includes the exponential distribution as one of its members, but also includes the normal distribution, binomial distribution, gamma distribution, Poisson, and many others.

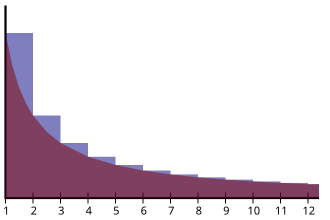

pdf & CDF

The probability density function (pdf) of an exponential distribution is

\[ f(x;\lambda) = \begin{cases} \lambda e^{-\lambda x} & x \ge 0, \\ 0 & x < 0. \end{cases} \]

Alternatively, this can be defined using the right-continuous Heaviside step function, H(x) where H(0)=1:

\[ f(x;\lambda) = \mathrm \lambda e^{-\lambda x} H(x) \]

- Heaviside step function - Wikipedia, the free encyclopedia

@ The Heaviside (

[ˈhevisaid]) step function, or the unit step function, usually denoted by θ (but sometimes u or 𝟙), is a discontinuous function whose value is zero for negative argument and one for positive argument. It is an example of the general class of step functions, all of which can be represented as linear combinations of translations of this one.

The Heaviside step function, using the half-maximum convention

The simplest definition of the Heaviside function is as the derivative of the ramp function:

\[H(x) := \frac{d}{dx} \max \{ x, 0 \}\]

The Heaviside function can also be defined as the integral of the Dirac delta function: H′ = δ. This is sometimes written as

\[H(x) := \int_{-\infty}^x { \delta(s)} \, \mathrm{d}s\]

Parameters λ > 0 rate, or inverse scale Support \(x ∈ [0, ∞)\) PDF \(λ e−λx\) CDF \(1 − e−λx\) Quantile \(-ln(1-F)/λ\) Mean \(λ−1 (=β)\) Median \(λ−1 ln(2)\) Mode 0 Variance \(λ−2 (=β2)\) Skewness 2 Ex. kurtosis 6 Entropy \(log(e/λ)\) MGF \(\frac{\lambda}{\lambda-t}, \text{ for } t < \lambda\) CF \(\frac{\lambda}{\lambda-it}\) Fisher information $^{-2} $ - Story

- Gamma distribution

@ - Story

@ You sit waiting for shooting stars, where the waiting time for a star is distributed Expo(λ). You want to see n shooting stars before you go home. The total waiting time for the nth shooting star is Gamma(n,λ).

Example You are at a bank, and there are 3 people ahead of you. The serving time for each person is Exponential with mean 2 minutes. Only one person at a time can be served. The distribution of your waiting time until it’s your turn to be served is Gamma(3, 1/2 ).

还是等流星,Expo(λ),意思是单位时间可以有 λ 个流星,1/λ 的时间预期可以等到一个。你想看到 n 个流星再回家,那么你的等待时间满足 Gamma(n,λ) 分布。排队也是一样的。

(但是感觉这两种不太一样,因为流星之间是无关的,而排队的人是有序的。)

In probability theory and statistics, the gamma distribution is a two-parameter family of continuous probability distributions. The common exponential distribution and chi-squared distribution are special cases of the gamma distribution. There are three different parametrizations in common use:

- With a shape parameter k and a scale parameter θ.

- With a shape parameter α = k and an inverse scale parameter β = 1/θ, called a rate parameter.

- With a shape parameter k and a mean parameter μ = k/β.

pdf & CDF

Parameters k > 0 shape, θ > 0 scale α > 0 shape, β > 0 rate Support \(\scriptstyle x \;\in\; (0,\, \infty)\) \(\scriptstyle x \;\in\; (0,\, \infty)\) pdf \(\frac{1}{\Gamma(k) \theta^k} x^{k \,-\, 1} e^{-\frac{x}{\theta}}\) \(\frac{\beta^\alpha}{\Gamma(\alpha)} x^{\alpha \,-\, 1} e^{- \beta x }\) CDF \(\frac{1}{\Gamma(k)} \gamma\left(k,\, \frac{x}{\theta}\right)\) \(\frac{1}{\Gamma(\alpha)} \gamma(\alpha,\, \beta x)\) Mean \(\scriptstyle \mathbf{E}[ X] = k \theta\) \(\scriptstyle\mathbf{E}[ X] = \frac{\alpha}{\beta}\) \(\scriptstyle \mathbf{E}[\ln X] = \psi(k) +\ln(\theta)\) \(\scriptstyle \mathbf{E}[\ln X] = \psi(\alpha) -\ln(\beta)\) Median No simple closed form No simple closed form Mode \(\scriptstyle (k \,-\, 1)\theta \text{ for } k \;{\geq}\; 1\) \(\scriptstyle \frac{\alpha \,-\, 1}{\beta} \text{ for } \alpha \;{\geq}\; 1\) Variance \(\scriptstyle\operatorname{Var}[ X] = k \theta^2\) \(\scriptstyle \operatorname{Var}[ X] = \frac{\alpha}{\beta^2}\) \(\scriptstyle\operatorname{Var}[\ln X] = \psi_1(k)\) \(\scriptstyle\operatorname{Var}[\ln X] = \psi_1(\alpha)\) Skewness \(\scriptstyle \frac{2}{\sqrt{k}}\) \(\scriptstyle \frac{2}{\sqrt{\alpha}}\) Excess kurtosis \(\scriptstyle \frac{6}{k}\) \(\scriptstyle \frac{6}{\alpha}\) - Story

- Beta distribution

@ In probability theory and statistics, the beta distribution is a family of continuous probability distributions defined on the interval

[0, 1]parametrized by two positive shape parameters, denoted by α and β, that appear as exponents of the random variable and control the shape of the distribution.The beta distribution has been applied to model the behavior of random variables limited to intervals of finite length in a wide variety of disciplines. For example, it has been used as a statistical description of allele frequencies in population genetics; time allocation in project management / control systems; sunshine data; variability of soil properties; proportions of the minerals in rocks in stratigraphy; and heterogeneity in the probability of HIV transmission.

In Bayesian inference, the beta distribution is the conjugate prior probability distribution for the Bernoulli, binomial, negative binomial and geometric distributions. For example, the beta distribution can be used in Bayesian analysis to describe initial knowledge concerning probability of success such as the probability that a space vehicle will successfully complete a specified mission. The beta distribution is a suitable model for the random behavior of percentages and proportions.

The usual formulation of the beta distribution is also known as the beta distribution of the first kind, whereas beta distribution of the second kind is an alternative name for the beta prime distribution.

pdf & CDF

Notation Beta(α, β) Parameters α > 0 shape (real), β > 0 shape (real) Support \(x \in (0, 1)\!\) PDF \(\frac{x^{\alpha-1}(1-x)^{\beta-1}} {\operatorname{Beta}(\alpha,\beta)}\!\) where \(\operatorname{Beta}(\alpha,\beta) = \frac{\Gamma(\alpha)\Gamma(\beta)}{\Gamma(\alpha + \beta)}\) CDF \(I_x(\alpha,\beta)\!\) Mean \(\operatorname{E}[X] = \frac{\alpha}{\alpha+\beta}\!\), \(\operatorname{E}[\ln X] = \psi(\alpha) - \psi(\alpha + \beta)\!\) Median \(\begin{matrix}I_{\frac{1}{2}}^{[-1]}(\alpha,\beta)\text{ (in general) }\\[0.5em] \approx \frac{ \alpha - \tfrac{1}{3} }{ \alpha + \beta - \tfrac{2}{3} }\text{ for }\alpha, \beta >1\end{matrix}\) Mode \(\frac{\alpha-1}{\alpha+\beta-2}\!\) for α, β >1 Variance \(\operatorname{var}[X] = \frac{\alpha\beta}{(\alpha+\beta)^2(\alpha+\beta+1)}\!\), \(\operatorname{var}[\ln X] = \psi_1(\alpha) - \psi_1(\alpha + \beta)\!\) Skewness \(\frac{2\,(\beta-\alpha)\sqrt{\alpha+\beta+1}}{(\alpha+\beta+2)\sqrt{\alpha\beta}}\) Ex. kurtosis \(\frac{6[(\alpha - \beta)^2 (\alpha +\beta + 1) - \alpha \beta (\alpha + \beta + 2)]}{\alpha \beta (\alpha + \beta + 2) (\alpha + \beta + 3)}\) Entropy \(\begin{matrix}\ln\operatorname{Beta}(\alpha,\beta)-(\alpha-1)\psi(\alpha)-(\beta-1)\psi(\beta)\\[0.5em] +(\alpha+\beta-2)\psi(\alpha+\beta)\end{matrix}\) MGF \(1 +\sum_{k=1}^{\infty} \left( \prod_{r=0}^{k-1} \frac{\alpha+r}{\alpha+\beta+r} \right) \frac{t^k}{k!}\) CF \({}_1F_1(\alpha; \alpha+\beta; i\,t)\!\) Fisher information \(\begin{matrix}\\ \operatorname{var}[\ln X] &\operatorname{cov}[\ln X, \ln(1-X)] \\ \operatorname{cov}[\ln X, \ln(1-X)] & \operatorname{var}[\ln (1-X)]\end{matrix}\) - Tolerance interval

@ A tolerance interval is a statistical interval within which, with some confidence level, a specified proportion of a sampled population falls.

“More specifically, a 100×p%/100×(1−α) tolerance interval provides limits within which at least a certain proportion (p) of the population falls with a given level of confidence (1−α).”

“A (p, 1−α) tolerance interval (TI) based on a sample is constructed so that it would include at least a proportion p of the sampled population with confidence 1−α; such a TI is usually referred to as p-content −(1−α) coverage TI.”

“A (p, 1−α) upper tolerance limit (TL) is simply an 1−αupper confidence limit for the 100 p percentile of the population.”

A tolerance interval can be seen as a statistical version of a probability interval. “In the parameters-known case, a 95% tolerance interval and a 95% prediction interval are the same.” If we knew a population’s exact parameters, we would be able to compute a range within which a certain proportion of the population falls. For example, if we know a population is normally distributed with mean and standard deviation , then the interval 1.96includes 95% of the population (1.96 is the z-score for 95% coverage of a normally distributed population).

- Gamma function

@ In mathematics, the gamma function (represented by the capital Greek letter Γ) is an extension of the factorial function, with its argument shifted down by 1, to real and complex numbers. That is, if n is a positive integer:

\[\Gamma(n) = (n-1)!.\]

The gamma function is defined for all complex numbers except the non-positive integers. For complex numbers with a positive real part, it is defined via a convergent improper integral:

\[\Gamma(t) = \int_0^\infty x^{t-1} e^{-x}\,dx.\]

This integral function is extended by analytic continuation to all complex numbers except the non-positive integers (where the function has simple poles), yielding the meromorphic (

[,merə'mɔːfɪk], 亚纯的) function we call the gamma function. In fact the gamma function corresponds to the Mellin transform of the negative exponential function:\[\Gamma(t) = \{ \mathcal M e^{-x} \} (t).\]

The gamma function is meromorphic in the whole complex plane.

The gamma function is a component in various probability-distribution functions, and as such it is applicable in the fields of probability and statistics, as well as combinatorics.

It is easy graphically to interpolate the factorial function to non-integer values, but is there a formula that describes the resulting curve?

Properties

- \(\Gamma(t+1)=t \Gamma(t)\,\), Γ(t) = Γ(t + 1)/t, \(\Gamma(t)=\frac{\Gamma(t+n)}{t(t+1)\cdots(t+n-1)},\)

- \(\Gamma(n) = 1 \cdot 2 \cdot 3 \cdots (n-1) = (n-1)!\,\)

- \(\Gamma\left(\tfrac{1}{2}\right)=\sqrt{\pi},\)

- \(\Gamma\left(\tfrac{1}{2}+n\right) = {(2n)! \over 4^n n!} \sqrt{\pi} = \frac{(2n-1)!!}{2^n} \sqrt{\pi} = \sqrt{\pi} \left[ {n-\frac{1}{2}\choose n} n! \right]\)

- \(\Gamma\left(\tfrac{1}{2}-n\right) = {(-4)^n n! \over (2n)!} \sqrt{\pi} = \frac{(-2)^n}{(2n-1)!!} \sqrt{\pi} = \frac{\sqrt{\pi}}{{-\frac{1}{2} \choose n} n!}\)

- Pi function

An alternative notation which was originally introduced by Gauss and which was sometimes used is the pi function, which in terms of the gamma function is

\[\Pi(z) = \Gamma(z+1) = z \Gamma(z) = \int_0^\infty e^{-t} t^z\, dt,\]

For Re(x) > 0 the nth derivative of the gamma function is:

\[\frac{{\rm d}^n}{{\rm d}x^n}\,\Gamma(x) = \int_0^\infty t^{x-1} e^{-t} (\ln t)^{n} dt.\]

- Stirling’s formula

Asymptotically (逐渐地) as t → ∞, the magnitude of the gamma function is given by Stirling’s formula

\[\Gamma(t+1)\sim\sqrt{2\pi t}\left(\frac{t}{e}\right)^{t},\]

where the symbol

~means that the quotient of both sides converges to 1.- Euler’s reflection formula

\[\Gamma(1-z) \Gamma(z) = {\pi \over \sin{(\pi z)}}, \qquad z \not\in \mathbf Z\]

- Duplication formula

\[\Gamma(z) \Gamma\left(z + \tfrac{1}{2}\right) = 2^{1-2z} \; \sqrt{\pi} \; \Gamma(2z).\]

The duplication formula is a special case of the multiplication theorem

\[\prod_{k=0}^{m-1}\Gamma\left(z + \frac{k}{m}\right) = (2 \pi)^{\frac{m-1}{2}} \; m^{\frac{1}{2} - mz} \; \Gamma(mz).\]

- Meromorphic function

In the mathematical field of complex analysis, a meromorphic function on an open subset D of the complex plane is a function that is holomorphic on all D except a set of isolated points (the poles of the function), at each of which the function must have a Laurent series. This terminology comes from the Ancient Greek meros (μέρος), meaning part, as opposed to holos (ὅλος), meaning whole.

- Mellin transform

In mathematics, the Mellin transform is an integral transform that may be regarded as the multiplicative version of the two-sided Laplace transform. This integral transform is closely connected to the theory of Dirichlet series, and is often used in number theory, mathematical statistics, and the theory of asymptotic expansions; it is closely related to the Laplace transform and the Fourier transform, and the theory of the gamma function and allied special functions.

The Mellin transform of a function f is

\[\left\{\mathcal{M}f\right\}(s) = \varphi(s)=\int_0^{\infty} x^{s-1} f(x)dx.\]

The inverse transform is

\[\left\{\mathcal{M}^{-1}\varphi\right\}(x) = f(x)=\frac{1}{2 \pi i} \int_{c-i \infty}^{c+i \infty} x^{-s} \varphi(s)\, ds.\]

refs and see also

- Digamma function

@ 这个定义和这个希腊字母也太贴切了……

In mathematics, the digamma function is defined as the logarithmic derivative of the gamma function:

\[\psi(x)=\frac{d}{dx}\ln\Big(\Gamma(x)\Big)=\frac{\Gamma'(x)}{\Gamma(x)}.\]

It is the first of the polygamma functions.

The digamma function is often denoted as ψ0(x), ψ0(x) or \(\digamma\) (after the archaic Greek letter Ϝ digamma).

Psi (uppercase Ψ, lowercase ψ; Greek: Ψι Psi)

- Relation to harmonic numbers

@ The gamma function obeys the equation

\[\Gamma(z+1)=z\Gamma(z).\]

Taking the derivative with respect to z gives:

\[\Gamma'(z+1)=z\Gamma'(z)+\Gamma(z)\]

Dividing by Γ(z+1) or the equivalent zΓ(z) gives:

\[\frac{\Gamma'(z+1)}{\Gamma(z+1)}=\frac{\Gamma'(z)}{\Gamma(z)}+\frac 1z\]

or:

\[\psi(z+1)=\psi(z)+\frac 1z\]

Since the harmonic numbers are defined as

\[H_n=\sum_{k=1}^n\frac 1k\]

the digamma function is related to it by:

\[\psi(n)=H_{n-1}-\gamma\]

where \(H_n\) is the n-th harmonic number, and γ is the Euler-Mascheroni constant. For half-integer values, it may be expressed as

\[\psi\left(n+{\frac{1}{2}}\right)=-\gamma-2\ln(2)+\sum_{k=1}^n \frac{2}{2k-1}\]

- Euler–Mascheroni constant

@ The Euler–Mascheroni constant (also called Euler’s constant) is a mathematical constant recurring in analysis and number theory, usually denoted by the lowercase Greek letter gamma (γ).

The area of the blue region converges to the Euler–Mascheroni constant.

It is defined as the limiting difference between the harmonic series and the natural logarithm:

\[\begin{align} \gamma &= \lim_{n\to\infty}\left(-\ln n + \sum_{k=1}^n \frac1{k}\right)\\ &=\int_1^\infty\left(\frac1{\lfloor x\rfloor}-\frac1{x}\right)\,dx. \end{align}\]

The numerical value of the Euler–Mascheroni constant, to 50 decimal places, is

0.57721566490153286060651209008240243104215933593992….

- Regularization

The Digamma function appears in the regularization of divergent integrals

\[\int_{0}^{\infty} \frac{dx}{x+a},\]

this integral can be approximated by a divergent general Harmonic series, but the following value can be attached to the series

\[\sum_{n=0}^{\infty} \frac{1}{n+a}= - \psi (a).\]

- Euler–Mascheroni constant

- Integral representations

@ If the real part of x is positive then the digamma function has the following integral representation

\[\psi(x)=\int\limits_0^\infty \left(\frac{e^{-t}}{t}-\frac{e^{-xt}}{1-e^{-t}}\right)dt.\]

- Relation to harmonic numbers

- Beta function

@ In mathematics, the beta function, also called the Euler integral of the first kind, is a special function defined by

\[{\operatorname{Beta}}(x,y) = \int_0^1t^{x-1}(1-t)^{y-1}\,\mathrm{d}t \!\]

for \(\textrm{Re}(x), \textrm{Re}(y) > 0.\,\)

The beta function was studied by Euler and Legendre and was given its name by Jacques Binet; its symbol Β is a Greek capital β rather than the similar Latin capital B.

其实是两个参数控制了形状。这个形状可能是某个 rv 的概率密度。如果 x = y = 1, 于是变成了 0-1 连续均匀分布。因为对称性,a,b 大于 1 的时候,形状是 bell shape,如果 a 更大,整体越右偏。

properties

symmetric: \({\operatorname{Beta}}(x,y) = {\operatorname{Beta}}(y,x). \!\)

relationship between gamma function and beta function: \({\operatorname{Beta}}(x,y)=\dfrac{\Gamma(x)\,\Gamma(y)}{\Gamma(x+y)} \!\)

When x and y are positive integers, it follows from the definition of the gamma function \(\Gamma\) that:

- \({\operatorname{Beta}}(x,y)=\dfrac{(x-1)!\,(y-1)!}{(x+y-1)!} \!\)

- \({\operatorname{Beta}}(x,y) = 2\int_0^{\pi/2}(\sin\theta)^{2x-1}(\cos\theta)^{2y-1}\,\mathrm{d}\theta, \qquad \mathrm{Re}(x)>0,\ \mathrm{Re}(y)>0 \!\)

- \({\operatorname{Beta}}(x,y) = \int_0^\infty\dfrac{t^{x-1}}{(1+t)^{x+y}}\,\mathrm{d}t, \qquad \mathrm{Re}(x)>0,\ \mathrm{Re}(y)>0 \!\)

- \({\operatorname{Beta}}(x,y) = \sum_{n=0}^\infty \dfrac{{n-y \choose n}} {x+n}, \!\)

- \({\operatorname{Beta}}(x,y) = \frac{x+y}{x y} \prod_{n=1}^\infty \left( 1+ \dfrac{x y}{n (x+y+n)}\right)^{-1}, \!\)

The Beta function satisfies several interesting identities, including

- \({\operatorname{Beta}}(x,y) = {\operatorname{Beta}}(x, y+1) + {\operatorname{Beta}}(x+1, y) \!\)

- \({\operatorname{Beta}}(x+1,y) = {\operatorname{Beta}}(x, y) \cdot \dfrac{x}{x+y} \!\)

- \({\operatorname{Beta}}(x,y+1) = {\operatorname{Beta}}(x, y) \cdot \dfrac{y}{x+y} \!\)

- \({\operatorname{Beta}}(x,y)\cdot(t \mapsto t_+^{x+y-1}) = (t \to t_+^{x-1}) * (t \to t_+^{y-1}) \qquad x\ge 1, y\ge 1, \!\)

- \({\operatorname{Beta}}(x,y) \cdot {\operatorname{Beta}}(x+y,1-y) = \dfrac{\pi}{x \sin(\pi y)}, \!\)

where \(t \mapsto t_+^x\) is a truncated power function and the star denotes convolution. The lowermost identity above shows in particular \(\Gamma(\tfrac12) = \sqrt \pi\). Some of these identities, e.g. the trigonometric formula, can be applied to deriving the volume of an n-ball in Cartesian coordinates.

Euler’s integral for the beta function may be converted into an integral over the Pochhammer contour C as

\[\displaystyle (1-e^{2\pi i\alpha})(1-e^{2\pi i\beta}){\operatorname{Beta}}(\alpha,\beta) =\int_C t^{\alpha-1}(1-t)^{\beta-1} \, \mathrm{d}t.\]

- Relationship between gamma function and beta function

@ To derive the integral representation of the beta function, write the product of two factorials as

\[ \begin{align} \Gamma(x)\Gamma(y) &= \int_0^\infty\ e^{-u} u^{x-1}\,\mathrm{d}u \int_0^\infty\ e^{-v} v^{y-1}\,\mathrm{d}v \\[6pt] &=\int_0^\infty\int_0^\infty\ e^{-u-v} u^{x-1}v^{y-1}\,\mathrm{d}u \,\mathrm{d}v. \end{align} \]

Changing variables by u = f(z,t) = zt and v = g(z,t) = z(1-t) shows that this is

\[\begin{align} \Gamma(x)\Gamma(y) &= \int_{z=0}^\infty\int_{t=0}^1 e^{-z} (zt)^{x-1}(z(1-t))^{y-1}|J(z,t)|\,\mathrm{d}t \,\mathrm{d}z \\[6pt] &= \int_{z=0}^\infty\int_{t=0}^1 e^{-z} (zt)^{x-1}(z(1-t))^{y-1}z\,\mathrm{d}t \,\mathrm{d}z \\[6pt] &= \int_{z=0}^\infty e^{-z}z^{x+y-1} \,\mathrm{d}z\int_{t=0}^1t^{x-1}(1-t)^{y-1}\,\mathrm{d}t\\ &=\Gamma(x+y){\operatorname{Beta}}(x,y), \end{align}\]

where |J(z,t)| is the absolute value of the Jacobian determinant of u = f(z,t) and v = g(z,t).

The stated identity may be seen as a particular case of the identity for the integral of a convolution. Taking

\(f(u):=e^{-u} u^{x-1} 1_{{\operatorname{R}}_+}\) and \(g(u):=e^{-u} u^{y-1} 1_{{\operatorname{R}}_+}\), one has:

\[\Gamma(x) \Gamma(y) = \left(\int_{{\operatorname{R}}}f(u)\mathrm{d}u\right) \left( \int_{{\operatorname{R}}} g(u) \mathrm{d}u \right) = \int_{{\operatorname{R}}}(f*g)(u)\mathrm{d}u ={\operatorname{Beta}}(x, y)\,\Gamma(x+y).\]

- Derivatives

@ We have

\[{\partial \over \partial x} \mathrm{B}(x, y) = \mathrm{B}(x, y) \left( {\Gamma'(x) \over \Gamma(x)} - {\Gamma'(x + y) \over \Gamma(x + y)} \right) = \mathrm{B}(x, y) (\psi(x) - \psi(x + y)),\]

where \(\psi(x)\) is the digamma function.

- Approximation

@ Stirling’s approximation gives the asymptotic formula

\[{\operatorname{Beta}}(x,y) \sim \sqrt {2\pi } \frac{{x^{x - \frac{1}{2}} y^{y - \frac{1}{2}} }}{{\left( {x + y} \right)^{x + y - \frac{1}{2}} }}\]

for large x and large y. If on the other hand x is large and y is fixed, then

\[{\operatorname{Beta}}(x,y) \sim \Gamma(y)\,x^{-y}.\]

- Chi-squared distribution

@

Notation \(\chi^2(k)\!\) or \(\chi^2_k\!\) Parameters \(k \in \mathbb{N}_{>0}\) (known as “degrees of freedom”) Support x ∈ [0, +∞) pdf \(\frac{1}{2^{\frac{k}{2}}\Gamma\left(\frac{k}{2}\right)}\; x^{\frac{k}{2}-1} e^{-\frac{x}{2}}\,\) CDF \(\frac{1}{\Gamma\left(\frac{k}{2}\right)}\;\gamma\left(\tfrac{k}{2},\,\frac{x}{2}\right)\) Mean k Median \(\approx k\bigg(1-\frac{2}{9k}\bigg)^3\) Mode max{ k − 2, 0 } Variance 2k Skewness \(\scriptstyle\sqrt{8/k}\,\) Ex. kurtosis 12 / k Entropy \(\begin{align}\tfrac{k}{2}&+\ln(2\Gamma(\tfrac{k}{2})) \\ &\!+(1-\tfrac{k}{2})\psi(\tfrac{k}{2}) \,{\scriptstyle\text{(nats)}} \end{align}\) MGF \((1 − 2 t )−k/2 for t < ½\) CF \((1 − 2 i t )−k/2\) The pdf of the chi-squared distribution is

\[f(x;\,k) = \begin{cases} \frac{x^{(k/2-1)} e^{-x/2}}{2^{k/2} \Gamma\left(\frac{k}{2}\right)}, & x > 0; \\ 0, & \text{otherwise}. \end{cases}\]

where Γ(k/2) denotes the Gamma function, which has closed-form values for integer k.

Its CDF is:

\[F(x;\,k) = \frac{\gamma(\frac{k}{2},\,\frac{x}{2})}{\Gamma(\frac{k}{2})} = P\left(\frac{k}{2},\,\frac{x}{2}\right),\]

where γ(s,t) is the lower incomplete Gamma function and P(s,t) is the regularized Gamma function.

In a special case of k = 2 this function has a simple form:

\[F(x;\,2) = 1 - e^{-\frac{x}{2}}\]

- Student’s t-distribution

@

Parameters \(\nu\) > 0 degrees of freedom (real) Support x ∈ (−∞; +∞) pdf \(\textstyle\frac{\Gamma \left(\frac{\nu+1}{2} \right)} {\sqrt{\nu\pi}\,\Gamma \left(\frac{\nu}{2} \right)} \left(1+\frac{x^2}{\nu} \right)^{-\frac{\nu+1}{2}}\!\) CDF \(\begin{matrix} \frac{1}{2} + x \Gamma \left( \frac{\nu+1}{2} \right) \times\\[0.5em] \frac{\,_2F_1 \left ( \frac{1}{2},\frac{\nu+1}{2};\frac{3}{2}; -\frac{x^2}{\nu} \right)} {\sqrt{\pi\nu}\,\Gamma \left(\frac{\nu}{2}\right)} \end{matrix}\) Mean 0 for \(\nu\) > 1, otherwise undefined Median 0 Mode 0 Variance \(\textstyle\frac{\nu}{\nu-2}\) for \(\nu\) > 2, ∞ for \(1 < \nu \le 2\), otherwise undefined Skewness 0 for \(\nu\) > 3, otherwise undefined Ex. kurtosis \(\textstyle\frac{6}{\nu-4}\) for \(\nu\) > 4, ∞ for \(2 < \nu \le 4\), otherwise undefined Student’s t-distribution has the pdf given by

\[f(t) = \frac{\Gamma(\frac{\nu+1}{2})} {\sqrt{\nu\pi}\,\Gamma(\frac{\nu}{2})} \left(1+\frac{t^2}{\nu} \right)^{\!-\frac{\nu+1}{2}},\!\]

where

- \(\nu\) is the number of degrees of freedom and

- \(\Gamma\) is the gamma function.

This may also be written as

\[f(t) = \frac{1}{\sqrt{\nu}\,\mathrm{B} (\frac{1}{2}, \frac{\nu}{2})} \left(1+\frac{t^2}{\nu} \right)^{\!-\frac{\nu+1}{2}}\!,\]

where B is the Beta function.

For \(\nu\) even,

\[\frac{\Gamma(\frac{\nu+1}{2})} {\sqrt{\nu\pi}\,\Gamma(\frac{\nu}{2})} = \frac{(\nu -1)(\nu -3)\cdots 5 \cdot 3} {2\sqrt{\nu}(\nu -2)(\nu -4)\cdots 4 \cdot 2\,}\cdot\]

For \(\nu\) odd,

\[\frac{\Gamma(\frac{\nu+1}{2})} {\sqrt{\nu\pi}\,\Gamma(\frac{\nu}{2})} = \frac{(\nu -1)(\nu -3)\cdots 4 \cdot 2} {\pi \sqrt{\nu}(\nu -2)(\nu -4)\cdots 5 \cdot 3\,}\cdot\!\]

- F-distribution

@ The F-distribution, also known as Snedecor’s F distribution or the Fisher–Snedecor distribution (after Ronald Fisher and George W. Snedecor) is, in probability theory and statistics, a continuous probability distribution.

The F-distribution arises frequently as the null distribution of a test statistic, most notably in the analysis of variance; see F-test.

If a random variable X has an F-distribution with parameters d1 and d2, we write X ~ F(d1, d2). Then the probability density function (pdf) for X is given by

\[\begin{align} f(x; d_1,d_2) &= \frac{\sqrt{\frac{(d_1\,x)^{d_1}\,\,d_2^{d_2}} {(d_1\,x+d_2)^{d_1+d_2}}}} {x\,\mathrm{B}\!\left(\frac{d_1}{2},\frac{d_2}{2}\right)} \\ &=\frac{1}{\mathrm{B}\!\left(\frac{d_1}{2},\frac{d_2}{2}\right)} \left(\frac{d_1}{d_2}\right)^{\frac{d_1}{2}} x^{\frac{d_1}{2} - 1} \left(1+\frac{d_1}{d_2}\,x\right)^{-\frac{d_1+d_2}{2}} \end{align}\]

for real x ≥ 0. Here \(\mathrm{B}\) is the beta function. In many applications, the parameters d1 and d2 are positive integers, but the distribution is well-defined for positive real values of these parameters.

The cumulative distribution function is

\[F(x; d_1,d_2)=I_{\frac{d_1 x}{d_1 x + d_2}}\left (\tfrac{d_1}{2}, \tfrac{d_2}{2} \right) ,\]

where I is the regularized incomplete beta function.

The expectation, variance, and other details about the F(d1, d2) are given in the sidebox; for d2 > 8, the excess kurtosis is

\[\gamma_2 = 12\frac{d_1(5d_2-22)(d_1+d_2-2)+(d_2-4)(d_2-2)^2}{d_1(d_2-6)(d_2-8)(d_1+d_2-2)}.\]

Parameters d1, d2 > 0 deg. of freedom Support x ∈ [0, +∞) pdf \(\frac{\sqrt{\frac{(d_1\,x)^{d_1}\,\,d_2^{d_2}} {(d_1\,x+d_2)^{d_1+d_2}}}} {x\,\mathrm{B}\!\left(\frac{d_1}{2},\frac{d_2}{2}\right)}\!\) CDF \(I_{\frac{d_1 x}{d_1 x + d_2}} \left(\tfrac{d_1}{2}, \tfrac{d_2}{2} \right)\) - Characterization

@ A random variate of the F-distribution with parameters d1 and d2 arises as the ratio of two appropriately scaled chi-squared variates:

\[X = \frac{U_1/d_1}{U_2/d_2}\]

where

- U1 and U2 have chi-squared distributions with d1 and d2 degrees of freedom respectively, and

- U1 and U2 are independent.

- Characterization

- Marginal distribution

@

Joint and marginal distributions of a pair of discrete, random variables X,Y having nonzero mutual information I(X; Y). The values of the joint distribution are in the 4×4 square, and the values of the marginal distributions are along the right and bottom margins.

The term marginal variable is used to refer to those variables in the subset of variables being retained. These terms are dubbed “marginal” because they used to be found by summing values in a table along rows or columns, and writing the sum in the margins of the table. The distribution of the marginal variables (the marginal distribution) is obtained by marginalizing over the distribution of the variables being discarded, and the discarded variables are said to have been marginalized out.

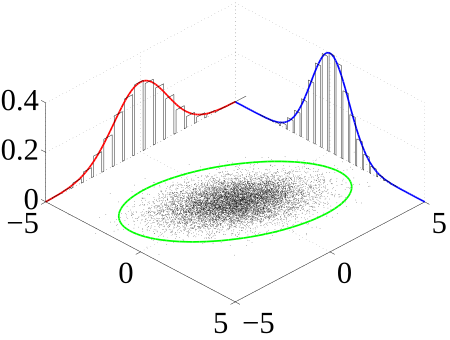

- Joint probability distribution

@

Many sample observations (black) are shown from a joint probability distribution. The marginal densities are shown as well.

The joint probability distribution can be expressed either in terms of a joint cumulative distribution function or in terms of a joint probability density function (in the case of continuous variables) or joint probability mass function (in the case of discrete variables). These in turn can be used to find two other types of distributions: the marginal distribution giving the probabilities for any one of the variables with no reference to any specific ranges of values for the other variables, and the conditional probability distribution giving the probabilities for any subset of the variables conditional on particular values of the remaining variables.

Discrete case

\[\begin{align} \mathrm{P}(X=x\ \mathrm{and}\ Y=y) = \mathrm{P}(Y=y \mid X=x) \cdot \mathrm{P}(X=x) = \mathrm{P}(X=x \mid Y=y) \cdot \mathrm{P}(Y=y) \end{align}\]

Continuous case

\[f_{X,Y}(x,y) = f_{Y\mid X}(y\mid x)f_X(x) = f_{X\mid Y}(x\mid y)f_Y(y)\;\]

Mixed case

\[\begin{align} f_{X,Y}(x,y) = f_{X \mid Y}(x \mid y)\mathrm{P}(Y=y)= \mathrm{P}(Y=y \mid X=x) f_X(x) \end{align}\]

Joint distribution for independent variables

- \(\ P(X = x \ \mbox{and} \ Y = y ) = P( X = x) \cdot P( Y = y)\)

- \(\ f_{X,Y}(x,y) = f_X(x) \cdot f_Y(y)\)

- Sampling (statistics)

@ In statistics, quality assurance, and survey methodology, sampling is concerned with the selection of a subset of individuals from within a statistical population to estimate characteristics of the whole population. Each observation measures one or more properties (such as weight, location, color) of observable bodies distinguished as independent objects or individuals. In survey sampling, weights can be applied to the data to adjust for the sample design, particularly stratified sampling. Results from probability theory and statistical theory are employed to guide the practice. In business and medical research, sampling is widely used for gathering information about a population.

The sampling process comprises several stages:

- Defining the population of concern

- Specifying a sampling frame, a set of items or events possible to measure

- Specifying a sampling method for selecting items or events from the frame